Robotics

Robotics researchers at the Paul G. Allen School of Computer Science & Engineering are engaged in ground-breaking work in mechanism design, sensors, computer vision, robot learning, Bayesian state estimation, control theory, numerical optimization, biomechanics, neural control of movement, computational neuroscience, brain-machine interfaces, natural language instruction, physics-based animation, mobile manipulation, and human-robot interaction. We are currently working to define large-scale joint initiatives that will enable us to leverage our multi-disciplinary expertise to attack the most challenging problems in the field.

Research Labs

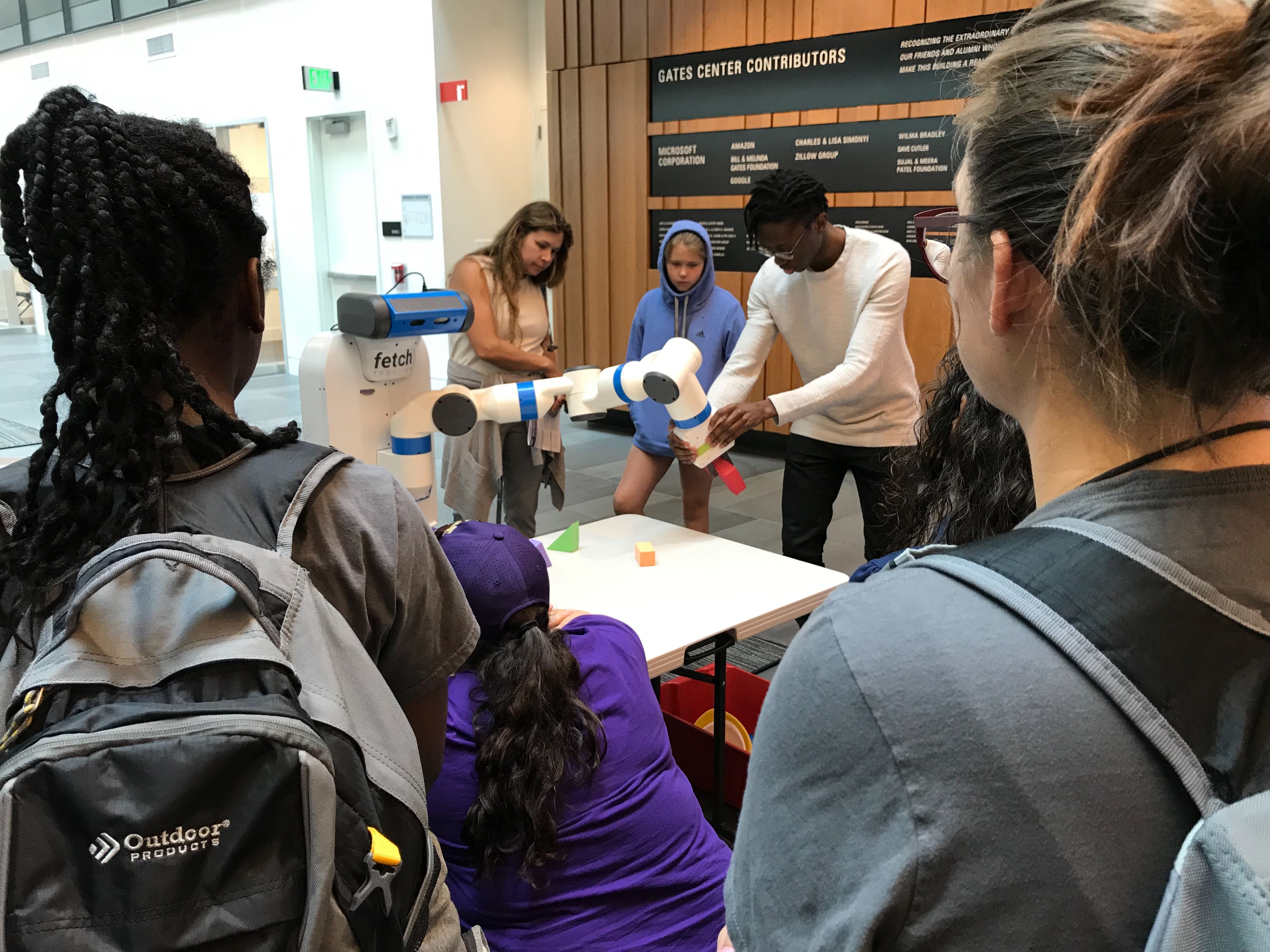

Human-Centered Robotics Lab

In the Human-Centered Robotics Lab we aim to develop robotics technologies that are useful and usable for future users of task-oriented robots. To that end, we take a human-centered approach: we work on robotics problems that enable the capabilities most needed to assist people in everyday tasks, while also making state-of-the-art robotics capabilities accessible to users with no technical knowledge. Our projects focus on end-user robot programming, robotic tool use, and assistive robotics.

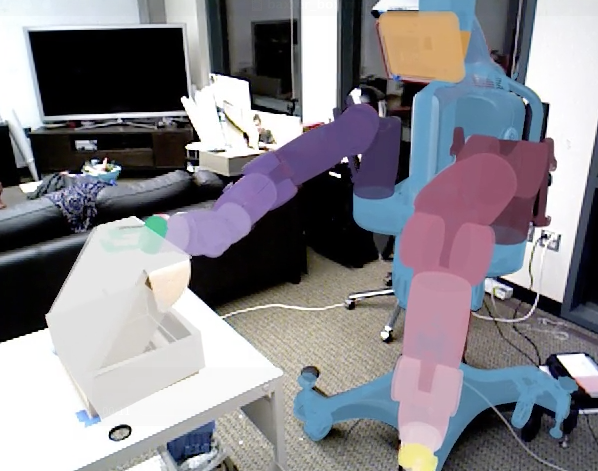

Personal Robotics Lab

The mission of the Personal Robotics Lab is to develop the fundamental building blocks of perception, manipulation, learning, and human-robot interaction to enable robots to perform complex physical manipulation tasks under clutter and uncertainty with and around people.

Robotics and State Estimation Lab

The RSE-Lab was established in 2001. We are interested in the development of computing systems that interact with the physical world in an intelligent way. To investigate such systems, we focus on problems in robotics and activity recognition. We develop rich yet efficient techniques for perception and control in mobile robot navigation, map building, collaboration, and manipulation. We also develop state estimation and machine learning approaches for areas such as object recognition and tracking, human-robot interaction, and human activity recognition.

Robot Learning Lab

We perform fundamental and applied research in machine learning, artificial intelligence, and robotics with a focus on developing theory and systems that tightly integrate perception, learning, and control. Our work touches on a range of problems including computer vision, state estimation, localization and mapping, high-speed navigation, motion planning, and robotic manipulation. The algorithms that we develop use and extend theory from deep learning and neural networks, nonparametric statistics, graphical models, nonconvex optimization, quantum physics, online learning, reinforcement learning, and optimal control.

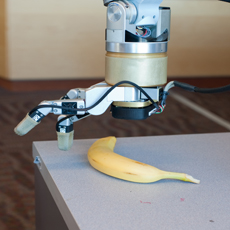

Sensor Systems Lab

In the Sensor Systems Laboratory we invent new sensor systems, devise innovative ways to power and communicate with them, and develop algorithms for using them. Our research has applications in the domains of ubiquitous computing, bioelectronics, and robotics. We have developed a series of pre-touch sensors for robot manipulation. The sensors allow the fingers to detect an object before contact. Proximity information is provided in the local coordinate frame of the fingers, eliminating errors that arise when sensors and actuators are in different frames. In addition to developing the sensors themselves, we are interested in algorithms for estimation, control, and manipulation that make use of the novel sensors. Our primary robotics research platform is our lab's dedicated PR2 robot; we replace the PR2's fingers with our own custom fingers, so that our sensors can be used as if they were native to the PR2. We have worked on robot chess and a robot that solves the Rubik's Cube, and we have an increasing interest in robots that play games with people.